Eliezer suggests that increased economic growth is likely bad for the world, as it should speed up AI progress relative to work on AI safety. He reasons that this should happen because safety work probably depends more on insights building upon one another than AI work in general does. Thus work on safety should parallelize less well than work on AI, and so should be at a disadvantage in a faster-paced economy. Also, unfriendly AI should benefit more from brute computational power than friendly AI. He explains,

“Both of these imply, so far as I can tell, that slower economic growth is good news for FAI; it lengthens the deadline to UFAI and gives us more time to get the job done.” …

“Roughly, it seems to me like higher economic growth speeds up time and this is not a good thing. I wish I had more time, not less, in which to work on FAI; I would prefer worlds in which this research can proceed at a relatively less frenzied pace and still succeed, worlds in which the default timelines to UFAI terminate in 2055 instead of 2035.”

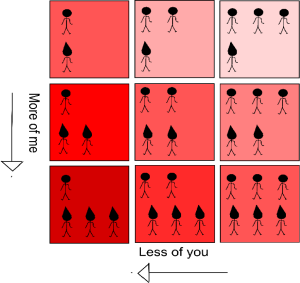

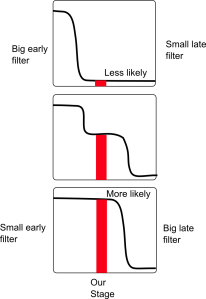

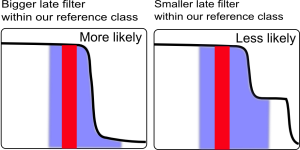

I’m sympathetic to others’ criticisms of this argument, but would like to point out a more basic problem, granting all other assumptions. As far as I can tell, the effect of economic growth on parallelization should go the other way. Economic progress should make work in a given area less parallel, relatively helping those projects that do not parallelize well.

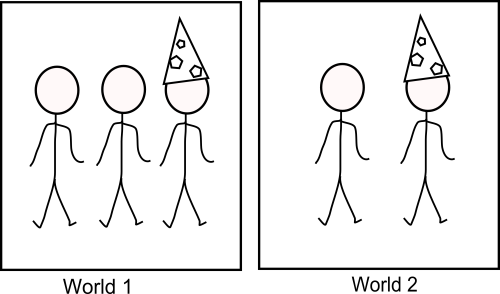

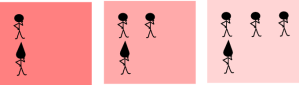

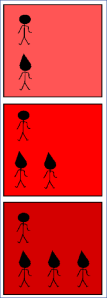

Economic growth, without substantial population growth, means that each person is doing more work in their life. This means the work that would have otherwise been done by a number of people can be done by a single person, in sequence. The number of AI researchers at a given time shouldn’t obviously change much if the economy overall is more productive. But each AI researcher will have effectively lived and worked for longer, before they are replaced by a different person starting off again ignorant. If you think research is better done by a small number of people working for a long time than a lot of people doing a little bit each, economic growth seems like a good thing.

On this view, economic growth is not like speeding up time – it is like speeding up how fast you can do things, which is like slowing down time. Robotic cars and more efficient coffee lids alike mean researchers (and everyone else) have more hours per day to do things other than navigate traffic and lid their coffee. I expect economic growth seems like speeding up time if you imagine it speeding up others’ abilities to do things and forget it also speeds up yours, or if you think it speeds up some things everyone does without speeding up some important things, such as people’s abilities to think and prepare. But that seems not obviously true, and would anyway be another argument.