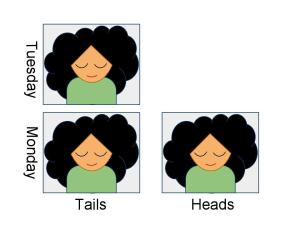

Consider the Sleeping Beauty Problem. Sleeping Beauty is put to sleep on Sunday night. A coin is tossed. If it lands heads, she is awoken once on Monday, then sleeps until the end of the experiment. If it lands tails, she is woken once on Monday, drugged to remove her memory of this event, then awoken once on Tuesday, before sleeping till the end of the experiment. The awakenings during the experiment are indistinguishable to Beauty, so when she awakens she doesn’t know what day it is or how the coin fell. The question is this: when Beauty wakes up on one of these occasions, how confident should she be that heads came up?

There are two popular answers, 1/2 and 1/3. However, virtually everyone agrees that if Sleeping Beauty learns that it is Monday, the odds she gives to tails should be halved from whatever they were initially. So ‘Halfers’ come to think heads has a 2/3 chance, and ‘Thirders’ come to think heads is as likely as tails. This is the standard Bayesian way to update, and is pretty uncontroversial.
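The update described above can be checked with a few lines of arithmetic. This is only a sketch: the function name `update_on_monday` is made up for illustration, and it assumes that, given tails, Beauty splits her credence evenly between the Monday and Tuesday wakings.

```python
from fractions import Fraction

def update_on_monday(p_heads):
    """Return P(heads | Monday) from Beauty's credence in heads on waking.

    Assumption: given tails, she treats Monday and Tuesday as equally likely.
    """
    p_tails = 1 - p_heads
    p_heads_and_monday = p_heads        # a heads waking is always on Monday
    p_tails_and_monday = p_tails / 2    # half of her tails credence sits on Monday
    return p_heads_and_monday / (p_heads_and_monday + p_tails_and_monday)

halfer = update_on_monday(Fraction(1, 2))   # Halfer: 1/2 becomes 2/3
thirder = update_on_monday(Fraction(1, 3))  # Thirder: 1/3 becomes 1/2
```

In both cases the odds on tails are halved: from 1:1 to 1:2 for the Halfer, and from 2:1 to 1:1 for the Thirder.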

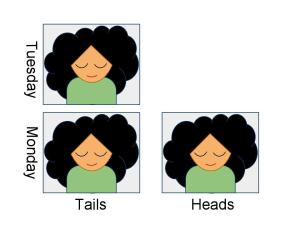

Now consider a variation on the Sleeping Beauty Problem where Sleeping Beauty will be woken up one million times on tails and only once on heads. Again, the probability you initially put on heads is determined by the reasoning principle you use, but the shift if you learn that you are in the first awakening will be the same either way: you will have to shift your odds by a factor of a million toward heads. Nick Bostrom points out that in this scenario you will have to be extremely certain of either heads or tails, either before or after this shift, and that such extreme certainty seems intuitively unjustified at either point.

Extreme Sleeping Beauty wakes up a million times on tails or once on heads. There is no choice of 'a' which doesn't lead to extreme certainty either before or after knowing she is at her first waking.
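To make the size of the shift concrete, here is a rough sketch of the same update with a million tails wakings. The function name and the two starting credences are illustrative assumptions: 1/2 is the Halfer starting point, and 1/(10^6 + 1) is the Thirder analogue, which gives each possible waking equal weight.

```python
from fractions import Fraction

N_TAILS_WAKINGS = 10**6

def heads_credence_after_first_waking(p_heads, n=N_TAILS_WAKINGS):
    """Multiply the odds on heads by n, then convert back to a probability.

    Learning 'this is the first waking' rules out all but 1 in n of the
    tails wakings, so the odds on heads grow by a factor of n.
    """
    odds_heads = p_heads / (1 - p_heads)
    posterior_odds = odds_heads * n
    return posterior_odds / (1 + posterior_odds)

# Halfer analogue: even odds before, near-certainty of heads after.
halfer_after = heads_credence_after_first_waking(Fraction(1, 2))
# Thirder analogue: near-certainty of tails before, even odds after.
thirder_after = heads_credence_after_first_waking(Fraction(1, N_TAILS_WAKINGS + 1))
```

Whichever starting point is chosen, one of the two credences is within about a millionth of certainty.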

However, the only alternative to this certainty is for Sleeping Beauty to keep odds near 1:1 both before and after she learns she is at her first waking. This apparently entails giving up Bayesian conditionalization. Having excluded 99.9999% of the situations she may have been in where tails would have come up, Sleeping Beauty retains her previous credence in tails.

This is what Nick proposes doing, however, with his ‘hybrid model’ of Sleeping Beauty. He argues that this does not violate Bayesian conditionalization in cases such as this, because Sleeping Beauty is in different indexical positions before and after knowing that she is at her first waking, so her observer-moments (thick time-slices of a person) at the different times need not agree.

I disagree, as I shall explain. Briefly: the disagreement between different observer-moments should not occur, and is deeper than it first seems; the existing arguments against so-called non-indexical conditioning also count against the hybrid model; and Nick fails in his effort to show that Beauty won’t predictably lose money gambling.

Is hybrid Beauty Bayesian?

Nick argues first that a Bayesian may accept having 50:50 credences both before and after knowing that it is Monday, then claims that one should do so, given the absurdities of the Extreme Sleeping Beauty problem above and variants of it. His argument for the first part is as follows (or see p10). There are actually five rather than three relevant indexical positions in the Sleeping Beauty Problem. The extra two are Sleeping Beauty after she knows it is Monday under both heads and tails. He explains that it is the ignorant Beauties who should think the chance of Heads is half, and the informed Mondayers who should think the chance of Heads is still half conditional on it being Monday. Since these are observer-moments in different locations, he claims there is no inconsistency, and Bayesian conditionalization is upheld (presumably meaning that each observer-moment has a self-consistent set of beliefs).

He generalizes that one need not believe P(X) = A, just because one used to think P(X|E) = A and one just learned E. For that to be true the probability of X given that you don’t know E but will learn it would have to be equal to the probability of X given that you do know E but previously did not. Basically, conditional probabilities must not suddenly change just as you learn the conditions hold.

Why exactly a conditional probability might do this is left to the reader’s imagination. In this case Nick infers that it must have happened somehow, as no apparently consistent set of beliefs will save us from making strong updates in the Extreme Sleeping Beauty case and variations on it.

If receiving new evidence gives one leave to break consistency with any previous beliefs, on the grounds that one’s conditional credences may have changed with one’s location, there would be little left of Bayesian conditioning in practice. Normal Bayesian conditioning is remarkably successful then, if we are to learn that a huge range of other inferences were equally well supported in any case of its use.

Nick’s calling Beauty’s unchanging belief in even odds consistent for a Bayesian is not because these beliefs meet some sort of Bayesian constraint, but because he is assuming there are no constraints on the relationship between the beliefs of different Bayesian observer-moments. By this reasoning, any set of internally consistent belief sets can be ‘Bayesian’. In the present case we chose our beliefs by a powerful disinclination toward making certain updates. We should admit it is this intuition driving our probability assignments then, and not call it a variant of Bayesianism. And once we have stopped calling it Bayesianism, we must ask if the intuitions that motivate it really have the force behind them that the intuitions supporting Bayesianism in temporally extended people do.

Should observer-moments disagree?

Nick’s argument works by distinguishing every part of Beauty with different information as a different observer. This is used to allow them to safely hold beliefs inconsistent with one another. So this argument is defeated if Bayesians should agree with one another when they know one another’s posteriors, share priors and know one another to be rational. Aumann’s agreement theorem does indeed show this. There is a slight complication in that the disagreement is over probabilities conditional on different locations, but the locations are related in a known way, so it appears they can be converted to disagreement over the same question. For instance, past Beauty has a belief about the probability of heads conditional on her being followed by a Beauty who knows it is Monday, and future Beauty has a belief conditional on the Beauty in her past being followed by one who knows it is Monday (which she now knows it was).

Intuitively, there is still only one truth, and consistency is a tool for approaching it. Dividing people into a lot of disagreeing parts so that they are consistent by some definition is like paying someone to walk your pedometer in order to get fit.

Consider the disagreement between observer-moments in more detail. For instance, suppose before Sleeping Beauty knows what day it is she assigns 50 percent probability to heads having landed. Suppose she then learns that it is Monday, and still believes she has a 50 percent chance of heads. Let’s call the ignorant observer-moment Amy and the later moment who knows it is Monday Betty.

Amy and Betty do not merely come to different conclusions with different indexical information. Betty believes Amy was wrong, given only the information Amy had. Amy thought that conditional on being followed by an observer-moment who knew it was Monday, the chances of Heads were 2/3. Betty knows this, and knows nothing else except that Amy was indeed followed by an observer-moment who knows it is Monday, yet believes the chances of heads are in fact half. Betty agrees with the reasoning principle Amy used. She also agrees with Amy’s priors. She agrees that were she in Amy’s position, she would have the same beliefs Amy has. Betty also knows that though her location in the world has changed, she is in the same objective world as Amy – either Heads or Tails came up for both of them. Yet Betty must knowingly disagree with Amy about how likely that world is to be one where Heads landed. Neither Betty nor Amy can argue that her belief about their shared world is more likely to be correct than the other’s. If this principle is even a step in the right direction then, these observer-moments could do better by aggregating their apparently messy estimates of reality.

Identity with other unlikely anthropic principles

Though I don’t think Nick mentions it, the hybrid model reasoning is structurally identical to SSSA using the reference class of ‘people with exactly one’s current experience’, both before and after receiving evidence (different reference classes in each case since they have different information). In both cases every member of Sleeping Beauty’s reference class shares the same experience. This means the proportion of her reference class who share her current experiences is always one. This allows Sleeping Beauty to stick with the fifty percent chance given by the coin, both before and after knowing she is in her first waking, without any interference from changing potential locations.

SSSA with such a narrow reference class is exactly analogous to non-indexical conditioning, where ‘I observe X’ is interpreted as ‘X is observed by someone in the world’. Under both, possible worlds where your experience occurs nowhere are excluded and all other worlds retain their prior probabilities, normalized. Nick has criticised non-indexical conditioning because it leads to an inability to update on most evidence, thus prohibiting science for instance. Since most people are quite confident that it is possible to do science, they are implicitly confident that non-indexical conditioning is well off the mark. This implies that SSSA using the narrowest reference class is just as implausible, except that it may be more readily traded for SSSA with other reference classes when it gives unwanted results. Nick has suggested SSA should be used with a broader reference class for this reason (e.g. see Anthropic Bias p181), though he also supports using different reference classes at different times.

These reasoning principles are more appealing in the Extreme Sleeping Beauty case, because our intuition there is to not update on evidence. However if we pick different principles for different circumstances according to which conclusions suit us, we aren’t using those principles, we are using our intuitions. There isn’t necessarily anything inherently wrong with using intuitions, but when there are reasoning principles available that have been supported by a mesh of intuitively correct reasoning and experience, a single untested intuition would seem to need some very strong backing to compete.

Beauty will be terrible at gambling

It first seems that Hybrid Beauty can be Dutch-Booked (offered a collection of bets she would accept and which would lead to certain loss for her), which suggests she is being irrational. Nick gives an example:

Upon awakening, on both Monday and Tuesday, before either knows what day it is, the bookie offers Beauty the following bet:

Beauty gets $10 if HEADS and MONDAY.

Beauty pays $20 if TAILS and MONDAY.

(If TUESDAY, then no money changes hands.)

On Monday, after both the bookie and Beauty have been informed that it is Monday, the bookie offers Beauty a further bet:

Beauty gets $15 if TAILS.

Beauty pays $15 if HEADS.

If Beauty accepts these bets, she will emerge $5 poorer.
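The $5 loss can be tallied directly from the payoffs quoted above. This is just a bookkeeping sketch; the function name `total_payoff` is made up for illustration.

```python
def total_payoff(coin):
    """Net dollars for Beauty if she accepts both bets; coin is 'heads' or 'tails'."""
    # First bet: only the Monday waking pays out (on Tuesday no money changes hands).
    first = 10 if coin == "heads" else -20
    # Second bet: offered once, on Monday, after she learns what day it is.
    second = -15 if coin == "heads" else 15
    return first + second

payoffs = {coin: total_payoff(coin) for coin in ("heads", "tails")}
# Heads: 10 - 15 = -5. Tails: -20 + 15 = -5.
```

Either way the coin lands, she ends up $5 down, which is what makes the pair of bets a Dutch book.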

Nick argues that Sleeping Beauty should not accept the first bet. So that the offer itself does not tell her which waking she is in, the bet has to be made at every waking: twice if tails comes up and only once if heads does. It is known that when a bet on A vs. B will be made more times conditional on A than conditional on B, it can be irrational to bet according to the odds you assign to A vs. B. Nick illustrates:

…suppose you assign credence 9/10 to the proposition that the trillionth digit in the decimal expansion of π is some number other than 7. A man from the city wants to bet against you: he says he has a gut feeling that the digit is number 7, and he offers you even odds – a dollar for a dollar. Seems fine, but there is a catch: if the digit is number 7, then you will have to repeat exactly the same bet with him one hundred times; otherwise there will just be one bet. If this proviso is specified in the contract, the real bet that is being offered you is one where you get $1 if the digit is not 7 and you lose $100 if it is 7.

However in these cases the problem stems from the bet being paid out many times under one circumstance. Making extra bets that will never be paid out cannot affect the value of a set of bets. Imagine the aforementioned city man offered his deal, but added that all the bets other than your first one would be called off once you had made your first one. You would be in the same situation as if the bet had not included his catch to begin with. It would be an ordinary bet, and you should be willing to bet at the obvious odds. The same goes for Sleeping Beauty.
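The three versions of the city man’s bet can be compared with a short calculation. This is a sketch using the 9/10 credence from the quoted example; the helper name and the treatment of a called-off bet as a payout of zero are illustrative assumptions.

```python
from fractions import Fraction

P_NOT_SEVEN = Fraction(9, 10)   # credence from the quoted example
P_SEVEN = 1 - P_NOT_SEVEN

def expected_value(win_if_not_seven, loss_if_seven):
    """Expected dollars, given total winnings and total losses in each case."""
    return P_NOT_SEVEN * win_if_not_seven - P_SEVEN * loss_if_seven

# Ordinary even-odds bet: a dollar for a dollar.
plain_bet = expected_value(1, 1)
# With the catch: the losing bet is repeated and paid out one hundred times.
with_catch = expected_value(1, 100)
# Catch plus cancellation: the 99 repeats are made but pay out nothing.
repeats_called_off = expected_value(1, 1 + 99 * 0)
```

The plain bet and the cancelled-repeats bet both come out at +4/5 of a dollar, while the bet with the catch comes out at -91/10. Only repeats that actually pay out change the value.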

We can see this more generally. Suppose E(x) is the expected value of x, P(Si) is the probability of situation i arising, and V(Si) is the value to you if it arises. A bet consists of a set of gains or losses to you assigned to situations that may arise.

E(bet) = P(S1)*V(S1) + P(S2)*V(S2) + …

The City Man’s offered bet is bad because it has a large number of terms with negative value and relatively high probability, since they occur together rather than being mutually exclusive in the usual fashion. It is a trick because it is presented at first as if there were only one term with negative value.

Where bets will be written off in certain situations, V(Si) is zero in the terms corresponding to those situations, so the whole terms are also zero, and may as well not exist. This means the first bet Sleeping Beauty is offered in her Dutch-booking test should be made at the same odds as if she would only bet once on either coin outcome. Thus she should take the bet, and will be Dutch booked.
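The point that written-off terms drop out of the sum can be sketched as follows. The credences used here (1/2 for heads and Monday, 1/4 each for tails and Monday and for tails and Tuesday) are illustrative assumptions rather than figures from the text, and the names are hypothetical.

```python
from fractions import Fraction

def expected_value(bet):
    """bet: list of (probability, value) pairs, one per mutually exclusive situation."""
    return sum(p * v for p, v in bet)

# Beauty's first bet, with assumed credences for each situation.
first_bet = [
    (Fraction(1, 2), 10),    # heads and Monday: she gets $10
    (Fraction(1, 4), -20),   # tails and Monday: she pays $20
    (Fraction(1, 4), 0),     # tails and Tuesday: no money changes hands
]

# The same bet with the zero-valued situation left out entirely.
without_written_off = [(p, v) for p, v in first_bet if v != 0]

ev_full = expected_value(first_bet)
ev_trimmed = expected_value(without_written_off)
```

The two expected values are identical, since a term with V(Si) = 0 contributes nothing whatever its probability. With these particular credences both come to zero, so the bet is exactly fair by her own lights.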

Conclusion

In sum, Nick’s hybrid model is not a new kind of Bayesian updating, but use of a supposed loophole where Bayesianism is supposed to have few requirements. There doesn’t even seem to be a loophole there however, and if there were it would be a huge impediment to most practical uses of updating. Reasoning principles which are arguably identical to the hybrid model in the relevant ways have been previously discarded by most due to their obstruction of science among other things. Last, Sleeping Beauty really will lose bets if she adopts the hybrid model and is otherwise sensible.